In an Inferential Statistics test, a Beta Error is a "False Negative" (in contrast to an Alpha Error, which is a "False Positive"). Power is the Probability of not making a Beta Error. It is the Probability of correctly concluding that there is a Statistically Significant difference, change, or effect when one really exists. Put another way, Power is the Probability of rejecting the Null Hypothesis when the Null Hypothesis is false. If Beta is the Probability of a Beta Error, then Power = 1 - Beta (or 100% - Beta).
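To make the definition concrete, here is a minimal Python sketch for one common case: a two-sided one-sample z-test with a known standard deviation. This choice of test is an assumption made for illustration, since Power applies to many kinds of tests. The function name `z_test_power` and the example numbers (a standardized Effect Size of 0.5, n = 30, Alpha = 0.05) are hypothetical. Once Power is computed, Beta follows directly as 1 - Power.

```python
from statistics import NormalDist


def z_test_power(effect_size: float, n: int, alpha: float = 0.05) -> float:
    """Power of a two-sided one-sample z-test (hypothetical helper).

    Assumes a known standard deviation and a standardized effect size
    d = (true mean - null mean) / sigma.
    Power = P(reject the Null Hypothesis | the Null Hypothesis is false).
    """
    z = NormalDist()                      # standard normal distribution
    z_crit = z.inv_cdf(1 - alpha / 2)     # two-sided critical value
    shift = effect_size * n ** 0.5        # mean of the z statistic when H1 is true
    # Reject when the statistic falls beyond either critical value.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


power = z_test_power(effect_size=0.5, n=30, alpha=0.05)
beta = 1 - power  # Probability of a Beta Error (False Negative)
print(f"Power = {power:.3f}, Beta = {beta:.3f}")
```

With an Effect Size of zero, the Null Hypothesis is actually true, so the "rejection" probability this function returns equals Alpha. That is a handy sanity check that the two error types are being kept straight.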

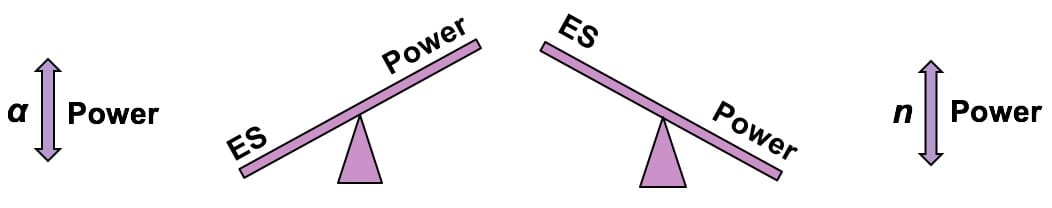

Power is directly affected by Alpha, the Level of Significance, and by n, the Sample Size. If we want to increase the Power of a test, the best way is to increase the Sample Size, n. We could also increase Alpha. Alpha is the value we select as the maximum Probability of an Alpha Error that we will tolerate and still call the results "Statistically Significant". So, if we're willing to tolerate a higher Probability of an Alpha Error, we can reduce the Probability of a Beta Error and thereby increase Power. This is illustrated in my blog post on the Alpha Error and Beta Error see-saws.

As the two see-saws in the middle of the graphic above demonstrate, Power has an inverse relationship with the Effect Size (ES) that a test is able to detect. If we want our test to be able to detect small Effect Sizes, then we need a high value for Power. As we just said, we can get this by increasing the Sample Size, n, or by increasing our tolerance for an Alpha Error. The second see-saw above shows the converse: if we have low Power, then only large Effect Sizes will be detectable.
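The relationships between Power, n, Alpha, and the detectable Effect Size can be sketched numerically. This minimal Python example again assumes a two-sided one-sample z-test with a known standard deviation and a standardized Effect Size. The helper names `z_test_power` and `min_detectable_effect` are hypothetical, and `min_detectable_effect` uses the common normal approximation that ignores the tiny chance of rejecting in the wrong tail. The Effect Size of 0.3 and the sample sizes in the loop are illustrative values only.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def z_test_power(effect_size: float, n: int, alpha: float = 0.05) -> float:
    """Power of a two-sided one-sample z-test (hypothetical helper)."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    shift = effect_size * n ** 0.5  # mean of the z statistic when H1 is true
    return Z.cdf(shift - z_crit) + Z.cdf(-shift - z_crit)


def min_detectable_effect(n: int, alpha: float = 0.05, power: float = 0.80) -> float:
    """Smallest standardized Effect Size detectable with the given Power.

    Normal approximation: ignores rejections in the opposite tail.
    """
    return (Z.inv_cdf(1 - alpha / 2) + Z.inv_cdf(power)) / n ** 0.5


print(f"{'n':>5} {'Power a=.05':>12} {'Power a=.10':>12} {'MDE (80%)':>10}")
for n in (20, 50, 100, 200):
    print(f"{n:>5} {z_test_power(0.3, n, 0.05):>12.3f} "
          f"{z_test_power(0.3, n, 0.10):>12.3f} {min_detectable_effect(n):>10.3f}")
```

Reading down the table, Power rises as n grows. The Alpha = 0.10 column is higher than the Alpha = 0.05 column, and the minimum detectable Effect Size (at 80% Power) shrinks as n grows. These are the same trade-offs the see-saws describe.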

6 Comments

Rob R

1/4/2017 05:39:17 am

You've accidentally mixed up the definitions of alpha and beta error. Alpha error is a false positive, while beta error is a false negative.

1/4/2017 11:46:14 am

I understand that Alpha Error is a False Positive and Beta Error is a False Negative, but I haven't mixed them up. Alpha Error is the opposite of Beta Error. And Power is the opposite of Beta. Power is defined as 100% - Beta, where Beta is the Probability of a Beta Error.

Rob R

1/4/2017 05:07:24 pm

Thanks for the response. I understand everything that you've said, but the first line in your article states, "In an Inferential Statistics test, a Beta Error is a "False Positive" (in contrast to an Alpha Error, which is a "False Negative")." Now in reply to my comment you state, "...Alpha Error is a False Positive and Beta Error is a False Negative...". I'm not trying to be difficult, but these two statements contradict each other. Am I missing something here?

1/5/2017 04:49:13 am

You're not being difficult; you're being very helpful. I had glossed right over that. Of course, it needed to be changed, so I just changed it. Thanks.

Rob R

1/5/2017 05:08:35 pm

Thank you for the clarification. I find your book, blog, and videos to be very useful. Regards.

1/7/2017 02:45:35 pm

That's great to hear. I'm curious: how did you find out about the book?

Author: Andrew A. (Andy) Jawlik is the author of the book, Statistics from A to Z -- Confusing Concepts Clarified, published by Wiley.